(aka the half-closed connection problem)

The problem described on this page can affect not only LDAP clients but also several other kinds of network applications. I’m explaining it using LDAP as an example because it can easily happen with Java LDAP clients, due to the default settings used by the standard classes.

So, if you are here, you have probably just set up a web application that uses a Java library to connect to an LDAP server, and everything seems to work fine… but when you access that application again some hours later, it remains stuck for 15 endless minutes!

OK, don’t panic. Is there a firewall between you and the LDAP server? I think so.

Here is a very useful passage from the TCP Keepalive HOWTO:

It’s a very common issue, when you are behind a NAT proxy or a firewall, to be disconnected without a reason. This behavior is caused by the connection tracking procedures implemented in proxies and firewalls, which keep track of all connections that pass through them. Because of the physical limits of these machines, they can only keep a finite number of connections in their memory. The most common and logical policy is to keep newest connections and to discard old and inactive connections first.

How to solve the problem

Basically, the solution is to set up a proper keep alive on the connection. You have 3 options:

1. Check your library settings

If you are using a good library, it probably supports some keep alive settings. RTFM.

2. Modify the source

A typical way to create an LDAP client is to pass a Hashtable with the desired settings to the InitialDirContext constructor.

Usually the class com.sun.jndi.ldap.LdapCtxFactory is used. If you look at the source code you can see that, by default:

- If you have set the property Context.SECURITY_PROTOCOL to “ssl”, it tries to create a Socket using an SSLSocketFactory:
  - first it tries to create an SSLSocketFactory using the property java.security.Security.getProperty("ssl.SocketFactory.provider")
  - if that property is null, it tries to obtain the factory from SSLContext.getDefault().getSocketFactory()
- Otherwise, if you aren’t using SSL, a new Socket is created inside the com.sun.jndi.ldap.Connection class.

Generally, by default, these sockets don’t have the keep alive setting activated.

Java lets you enable keep alive on a socket simply by calling:

socket.setKeepAlive(true);

So you can create your own SocketFactory implementation and add it to the Hashtable:

env.put("java.naming.ldap.factory.socket", "mypackage.MyKeepAliveSocketFactory");

I published a factory for non-SSL usage as a GitHub Gist.
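For illustration, a keep alive socket factory for plain (non-SSL) connections could look roughly like the sketch below. This is not the Gist mentioned above, only a minimal example written under a few assumptions: the class name MyKeepAliveSocketFactory is taken from the env.put line, the package declaration is omitted for brevity, and the JNDI LDAP provider is assumed to obtain the factory through a static getDefault() method.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.Socket;
import javax.net.SocketFactory;

// Minimal sketch: a SocketFactory whose sockets always have SO_KEEPALIVE enabled.
public class MyKeepAliveSocketFactory extends SocketFactory {

    private static final MyKeepAliveSocketFactory INSTANCE = new MyKeepAliveSocketFactory();

    // Assumption: the JNDI LDAP provider looks up this static method by reflection.
    public static SocketFactory getDefault() {
        return INSTANCE;
    }

    // Unconnected socket; used when the caller wants to connect with its own timeout.
    @Override
    public Socket createSocket() throws IOException {
        Socket socket = new Socket();
        socket.setKeepAlive(true);
        return socket;
    }

    @Override
    public Socket createSocket(String host, int port) throws IOException {
        Socket socket = createSocket();
        socket.connect(new InetSocketAddress(host, port));
        return socket;
    }

    @Override
    public Socket createSocket(InetAddress host, int port) throws IOException {
        Socket socket = createSocket();
        socket.connect(new InetSocketAddress(host, port));
        return socket;
    }

    @Override
    public Socket createSocket(String host, int port, InetAddress localHost, int localPort)
            throws IOException {
        Socket socket = createSocket();
        socket.bind(new InetSocketAddress(localHost, localPort));
        socket.connect(new InetSocketAddress(host, port));
        return socket;
    }

    @Override
    public Socket createSocket(InetAddress address, int port, InetAddress localAddress, int localPort)
            throws IOException {
        Socket socket = createSocket();
        socket.bind(new InetSocketAddress(localAddress, localPort));
        socket.connect(new InetSocketAddress(address, port));
        return socket;
    }
}
```

The value passed to env.put must be the fully qualified class name, so if you place the class in a package (for example mypackage), the string has to include it. Note that this only turns keep alive on; the timing of the probes still comes from the operating system defaults.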

3. Use the libdontdie library

If you don’t have the source code or you can’t modify it, you can install the libdontdie library and load it before running your Java application.

libdontdie in Tomcat

If your client runs inside Tomcat, you can add the following lines to $CATALINA_HOME/bin/setenv.sh:

export DD_DEBUG=1

export DD_TCP_KEEPALIVE_TIME=300

export DD_TCP_KEEPALIVE_INTVL=60

export DD_TCP_KEEPALIVE_PROBES=5

export LD_PRELOAD=/usr/lib/libdontdie.so
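To get a feeling for what these numbers mean, here is a small Java sketch. It assumes that the DD_* variables map to the usual Linux keep alive parameters (idle time before the first probe, interval between probes, number of unanswered probes), as their names suggest. It is not part of libdontdie: it uses jdk.net.ExtendedSocketOptions (available since Java 11) to set the same values on a single socket, and it estimates how long a dead peer can go unnoticed. The class and method names are made up for this example.

```java
import java.io.IOException;
import java.net.Socket;
import jdk.net.ExtendedSocketOptions;

public class KeepAliveTuning {

    // Same values as in the setenv.sh lines above (all in seconds, except PROBES).
    static final int IDLE_SECONDS = 300;
    static final int INTERVAL_SECONDS = 60;
    static final int PROBES = 5;

    // Rough upper bound: idle time, then every probe has to go unanswered.
    static int worstCaseDetectionSeconds(int idle, int interval, int probes) {
        return idle + interval * probes;
    }

    // Enables keep alive and, where the platform supports it, sets the timings.
    static Socket tune(Socket socket) throws IOException {
        socket.setKeepAlive(true);
        if (socket.supportedOptions().contains(ExtendedSocketOptions.TCP_KEEPIDLE)) {
            socket.setOption(ExtendedSocketOptions.TCP_KEEPIDLE, IDLE_SECONDS);
            socket.setOption(ExtendedSocketOptions.TCP_KEEPINTERVAL, INTERVAL_SECONDS);
            socket.setOption(ExtendedSocketOptions.TCP_KEEPCOUNT, PROBES);
        }
        return socket;
    }

    public static void main(String[] args) throws IOException {
        try (Socket socket = tune(new Socket())) {
            System.out.println("keep alive enabled: " + socket.getKeepAlive());
        }
        // 300 + 60 * 5 = 600 seconds, i.e. about 10 minutes
        System.out.println("worst case detection: "
                + worstCaseDetectionSeconds(IDLE_SECONDS, INTERVAL_SECONDS, PROBES) + " s");
    }
}
```

With the values above, a broken connection should be noticed after roughly 10 minutes of silence at most, and the probe sent after 300 idle seconds is what keeps the firewall from forgetting the connection in the first place, provided its idle timeout is longer than that.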

Simulating the problem

I tried reproducing the issue on my LAN: 192.168.0.2 is my server and 192.168.0.6 is my client.

Client uses the following code:

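    import java.util.Hashtable;
    import java.util.Scanner;
    import javax.naming.Context;
    import javax.naming.NamingEnumeration;
    import javax.naming.NamingException;
    import javax.naming.directory.Attributes;
    import javax.naming.directory.BasicAttribute;
    import javax.naming.directory.BasicAttributes;
    import javax.naming.directory.DirContext;
    import javax.naming.directory.InitialDirContext;
    import javax.naming.directory.SearchResult;

    public class FooLdapClient {

        public static void main(String[] args) throws NamingException {
            Hashtable<String, Object> env = new Hashtable<>();
            env.put(Context.INITIAL_CONTEXT_FACTORY, "com.sun.jndi.ldap.LdapCtxFactory");
            env.put(Context.PROVIDER_URL, "ldap://192.168.0.2:389");

            final DirContext ctx = new InitialDirContext(env); // connection created

            // Set up hook for closing connection on Ctrl+C
            Runtime.getRuntime().addShutdownHook(new Thread() {
                @Override
                public void run() {
                    System.out.println("\nClosing connection");
                    try {
                        ctx.close();
                    } catch (NamingException e) {
                        // nothing useful to do while shutting down
                    }
                }
            });

            Scanner reader = new Scanner(System.in);
            while (true) {
                reader.next(); // waiting for user input

                // performing a search on the same, long-lived connection
                Attributes attrs = new BasicAttributes();
                attrs.put(new BasicAttribute("cn"));
                NamingEnumeration<SearchResult> e = ctx.search("dc=zonia3000,dc=net", attrs);
                int count = 0;
                while (e.hasMore()) {
                    e.next();
                    count++;
                }
                System.out.println("Found " + count + " results");
                e.close();
            }
        }
    }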

The client keeps a connection to the LDAP server open and makes a new search request on each user input.

The existence of the connection can be checked using the command netstat -o.

This command produces:

- On the client:

Proto Recv-Q Send-Q Local Address Foreign Address State Timer

tcp6 0 0 192.168.0.6:48032 192.168.0.2:ldap ESTABLISHED off (0.00/0/0)

- On the server:

Proto Recv-Q Send-Q Local Address Foreign Address State Timer

tcp 0 0 192.168.0.2:ldap 192.168.0.6:48032 ESTABLISHED keepalive (297,66/0/0)

Note that the client has the timer “off” while the server has it set to “keepalive”.

Let’s play a trick on our poor client by adding this rule to the server firewall:

iptables -A OUTPUT -p tcp --sport 389 -j DROP

Now, if we shut down the LDAP server, it will send a FIN, ACK TCP packet to properly close its connection, but that packet will be dropped by the firewall and the client will never see it. This creates a “half-closed connection”: the server has closed it but the client still sees it in the ESTABLISHED state.

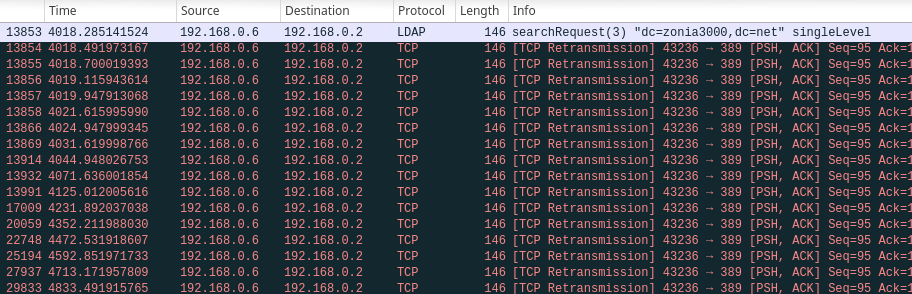

This is what it can be seen using Wireshark when the client makes a new request:

A long sequence of TCP Retransmission packets that can last up to 15 minutes. According to Linux TCP manual this should depend by tcp_retries2 settings:

The maximum number of times a TCP packet is retransmitted in established state before giving up. The default value is 15, which corresponds to a duration of approximately between 13 to 30 minutes, depending on the retransmission timeout.

You can see the value set on your machine using:

cat /proc/sys/net/ipv4/tcp_retries2
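The “15 endless minutes” can be reproduced with a bit of arithmetic. The sketch below assumes the usual Linux behaviour of doubling the retransmission timeout after every unanswered packet, starting from a minimum of 200 ms and capped at 120 s. These two bounds are assumptions on my side; the real timeout also depends on the measured round-trip time, so take the result as a lower bound rather than an exact figure.

```java
public class RetransmissionTimeout {

    // Sums the waiting time of the original packet plus `retries` retransmissions,
    // doubling the timeout each time until it reaches the cap.
    static long totalTimeoutMillis(int retries, long minRtoMillis, long maxRtoMillis) {
        long total = 0;
        long rto = minRtoMillis;
        for (int attempt = 0; attempt <= retries; attempt++) {
            total += rto;
            rto = Math.min(rto * 2, maxRtoMillis);
        }
        return total;
    }

    public static void main(String[] args) {
        // tcp_retries2 = 15, assumed bounds: 200 ms minimum, 120 s maximum
        long millis = totalTimeoutMillis(15, 200, 120_000);
        System.out.println(millis / 1000.0 + " s"); // prints "924.6 s"
    }
}
```

924.6 seconds is a little more than 15 minutes, which matches the time the application remains stuck.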

You can also see the stuck connection with netstat -o:

Proto Recv-Q Send-Q Local Address Foreign Address State Timer

tcp6 0 80 192.168.0.6:42240 192.168.0.2:ldap ESTABLISHED on (12,68/6/0)

Note the presence of 80 bytes in Send-Q (this means an outgoing packet hasn’t received an ACK from the server yet) and the activation of a timer (for the TCP Retransmission).

When the issue is solved

If we start the client with libdontdie loaded, we can see that keep alive is activated:

Proto Recv-Q Send-Q Local Address Foreign Address State Timer

tcp6 0 0 192.168.0.6:43020 192.168.0.2:ldap ESTABLISHED keepalive (1,64/0/0)

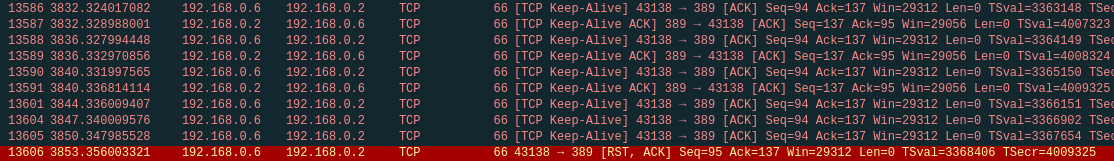

Repeating the iptables trick this is what we can see using Wireshark:

While the connection is working correctly the client periodically send a “Keep-Alive” packet to the server and the server responds.

When the connection became half-closed the server doesn’t respond anymore to client packets and, after some of “Keep-Alive” packets don’t receive an answer, the connection is reset.